We will focus on multi-container deployment by building an application that calculates values of the Fibonacci sequence.

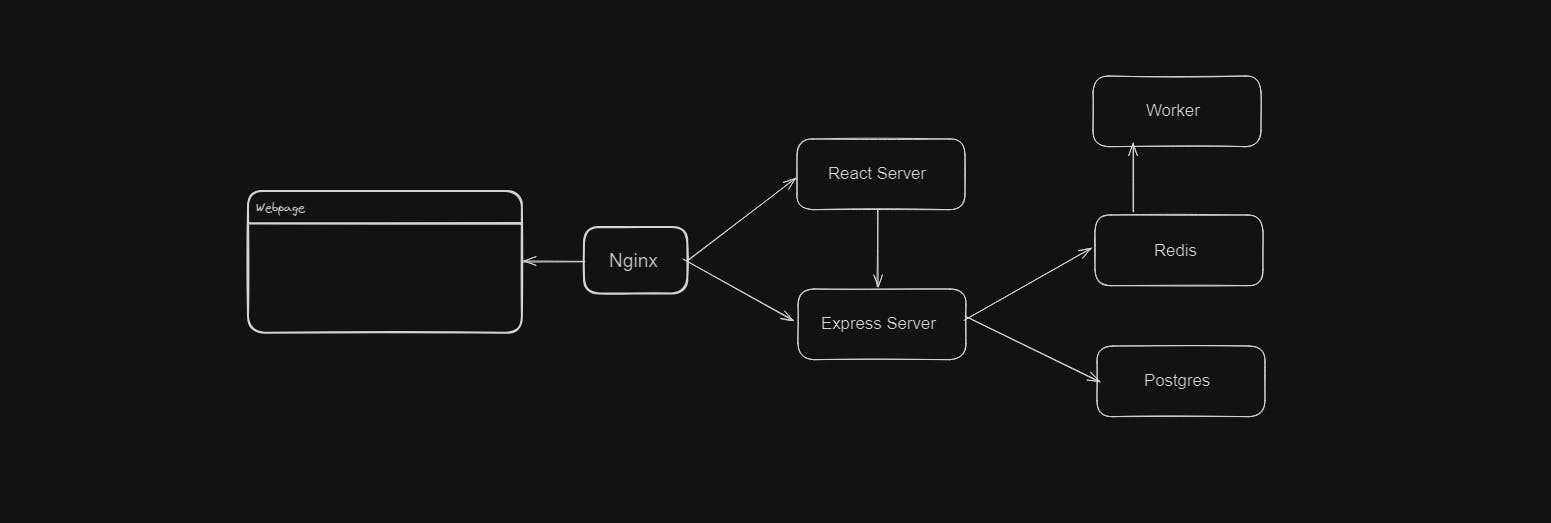

This diagram shows the development architecture of our application. Every request from the browser first reaches an Nginx server. If the browser asks for the front end (the HTML and JavaScript files), Nginx routes the request to the React development server. If it asks for a backend API route, Nginx routes it to the Express server.

Redis is an in-memory data store. In this app it holds the calculated Fibonacci values and also passes messages to a separate worker process that performs the calculation. Every index the user submits is stored permanently in a Postgres database.

Project Setup

First, create a project directory. Inside it, create a folder named worker.

Inside worker, create a package.json file that lists the required dependencies:

```json
{
  "dependencies": {
    "nodemon": "1.18.3",
    "redis": "2.8.0"
  },
  "scripts": {
    "start": "node index.js",
    "dev": "nodemon"
  }
}
```

Then create an index.js file that holds the worker's main logic and connects to the Redis server:

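```js
const keys = require('./keys');
const redis = require('redis');

// Main connection, used to write results into the 'values' hash
const redisClient = redis.createClient({
  host: keys.redisHost,
  port: keys.redisPort,
  // If the connection fails or drops, wait 1000 ms before reconnecting
  retry_strategy: () => 1000,
});

// Separate connection for subscribing: a connection in subscriber mode
// cannot run ordinary commands such as hset
const sub = redisClient.duplicate();

// Simple recursive Fibonacci (exponential time), with fib(0) = fib(1) = 1
function fib(index) {
  if (index < 2) {
    return 1;
  }
  return fib(index - 1) + fib(index - 2);
}

// Each message on 'insert' is an index; store fib(index) under that index
sub.on('message', (channel, message) => {
  redisClient.hset('values', message, fib(parseInt(message, 10)));
});

sub.subscribe('insert');
```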

The first two lines import the connection settings from keys.js and the redis library.

Next, a Redis client is created using the host and port from keys. retry_strategy is a function that the client calls when the connection to Redis fails or is lost. Its return value is the number of milliseconds to wait before reconnecting. Here it always returns 1000, so the client retries once every second.
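The fixed one-second delay retries forever at the same pace. If you want the delay to grow instead, note that node_redis 2.x passes retry_strategy an options object. That object includes attempt (the number of reconnection attempts so far) and total_retry_time (milliseconds spent retrying). Returning a number sets the delay, and returning an Error stops reconnecting.

The sketch below relies only on those two fields. The name backoffRetryStrategy and the limits (100 ms per attempt, a 3-second cap, one hour in total) are illustrative choices, not part of the tutorial.

```javascript
// Hypothetical retry strategy for the redis 2.x client option `retry_strategy`.
// node_redis calls it with an options object; this sketch only reads
// `attempt` and `total_retry_time` from it.
function backoffRetryStrategy(options) {
  // Give up after one hour of retrying: returning an Error stops reconnecting
  if (options.total_retry_time > 1000 * 60 * 60) {
    return new Error('Redis retry time exhausted');
  }
  // Otherwise wait 100 ms per attempt so far, but never more than 3 seconds
  return Math.min(options.attempt * 100, 3000);
}

// Usage in the worker (not executed here):
// const redisClient = redis.createClient({
//   host: keys.redisHost,
//   port: keys.redisPort,
//   retry_strategy: backoffRetryStrategy,
// });

console.log(backoffRetryStrategy({ attempt: 1, total_retry_time: 0 })); // 100
console.log(backoffRetryStrategy({ attempt: 50, total_retry_time: 5000 })); // 3000
```

The growing delay avoids hammering a Redis server that is still starting up, while the cap keeps reconnection reasonably quick once it is back.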

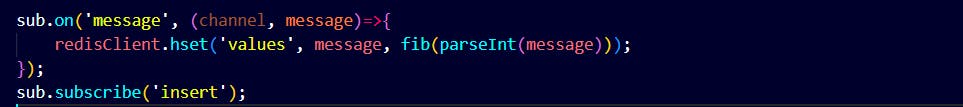

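A subscriber instance is created by duplicating the original client. This second connection listens for messages on a Redis channel. It has to be separate because a Redis connection that has subscribed to a channel can only run subscription-related commands, so it could not also write results with hset.

fib is a simple recursive Fibonacci function with fib(0) = fib(1) = 1. Its running time grows exponentially with the index, so large indexes take a long time to compute.

The subscriber listens for messages on the 'insert' channel. Each message is a submitted index. The worker parses it as an integer, computes fib for it, and stores the result in the Redis hash named 'values', using the index as the field name.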
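For comparison, here is an iterative sketch that keeps the same base case, fib(0) = fib(1) = 1, but runs in linear time. The name fibIterative is introduced here and is not part of the tutorial. The block also copies the worker's recursive fib and checks that both agree for small indexes.

```javascript
// Iterative Fibonacci with the same convention as the worker's fib():
// fib(0) = 1 and fib(1) = 1. Takes O(index) steps instead of O(2^index).
function fibIterative(index) {
  let prev = 1; // value at position i - 1
  let curr = 1; // value at position i
  for (let i = 1; i < index; i++) {
    [prev, curr] = [curr, prev + curr];
  }
  return curr;
}

// The worker's recursive version, copied here for comparison
function fib(index) {
  if (index < 2) return 1;
  return fib(index - 1) + fib(index - 2);
}

// Both versions produce the same sequence: 1, 1, 2, 3, 5, 8, ...
for (let i = 0; i <= 20; i++) {
  if (fib(i) !== fibIterative(i)) throw new Error(`mismatch at index ${i}`);
}

console.log(fibIterative(40)); // 165580141
```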
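The message flow between the server and the worker can be tried out without Redis. The sketch below is an in-process stand-in, not the tutorial's code:

- A Map plays the role of the 'values' hash.
- Node's EventEmitter plays the role of the 'insert' channel.
- submitIndex is a hypothetical name for what the POST /values handler does.

```javascript
const { EventEmitter } = require('events');

// In-memory stand-ins (assumptions, not Redis): the Map acts as the
// 'values' hash and the EventEmitter acts as the 'insert' channel.
const values = new Map();
const channel = new EventEmitter();

function fib(index) {
  if (index < 2) return 1;
  return fib(index - 1) + fib(index - 2);
}

// Worker side: mirrors sub.on('message', ...) plus sub.subscribe('insert').
// Redis stores hash values as strings, so the result is stored as a string.
channel.on('insert', (message) => {
  values.set(message, String(fib(parseInt(message, 10))));
});

// Server side: mirrors hset(..., 'Nothing yet!') followed by publish('insert', index)
function submitIndex(index) {
  values.set(String(index), 'Nothing yet!');
  channel.emit('insert', String(index));
}

submitIndex(7);
console.log(values.get('7')); // 21
```

One difference from the real app: EventEmitter calls its listeners synchronously, while Redis delivers messages over the network. In the real app, the 'Nothing yet!' placeholder can therefore be visible for a moment before the worker overwrites it.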

(keys.js)

```js
module.exports = {
  redisHost: process.env.REDIS_HOST,
  redisPort: process.env.REDIS_PORT,
};
```
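keys.js simply passes along whatever is in the environment, so a missing variable becomes undefined without any warning. A fail-fast variant is sketched below. The helpers requireEnv and loadKeys are hypothetical and not part of the tutorial. They take the environment as a parameter (defaulting to process.env) so they are easy to try out.

```javascript
// Hypothetical helper: read an environment variable or throw a clear error,
// instead of silently handing `undefined` to the Redis client.
function requireEnv(name, env = process.env) {
  const value = env[name];
  if (value === undefined || value === '') {
    throw new Error(`Missing required environment variable ${name}`);
  }
  return value;
}

// A keys.js built on it might export loadKeys() (same variable names as above)
function loadKeys(env = process.env) {
  return {
    redisHost: requireEnv('REDIS_HOST', env),
    redisPort: requireEnv('REDIS_PORT', env),
  };
}

console.log(loadKeys({ REDIS_HOST: 'redis', REDIS_PORT: '6379' }));
```

With this variant, forgetting REDIS_HOST in docker-compose.yml stops the worker at startup with a readable message, rather than leaving it trying to reach an unintended host.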

Next, create a folder named server for the Express API.

(package.json)

```json
{
  "dependencies": {
    "express": "4.16.3",
    "pg": "8.0.3",
    "redis": "2.8.0",
    "cors": "2.8.4",
    "nodemon": "1.18.3",
    "body-parser": "*"
  },
  "scripts": {
    "dev": "nodemon",
    "start": "node index.js"
  }
}
```

(keys.js)

```js
module.exports = {
  redisHost: process.env.REDIS_HOST,
  redisPort: process.env.REDIS_PORT,
  pgUser: process.env.PGUSER,
  pgHost: process.env.PGHOST,
  pgDatabase: process.env.PGDATABASE,
  pgPassword: process.env.PGPASSWORD,
  pgPort: process.env.PGPORT,
};
```

(index.js)

```js
const keys = require('./keys');

const express = require('express');
const bodyParser = require('body-parser');
const cors = require('cors');

const app = express();
app.use(cors());
app.use(bodyParser.json());
```

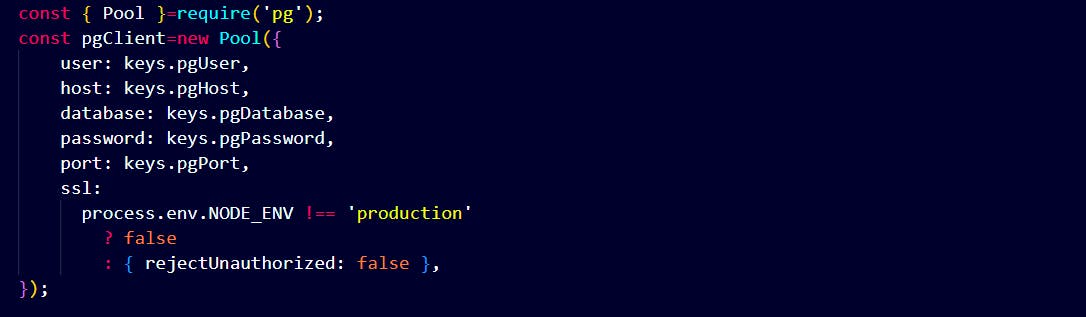

```js
// POSTGRES SETUP
const { Pool } = require('pg');

const pgClient = new Pool({
  user: keys.pgUser,
  host: keys.pgHost,
  database: keys.pgDatabase,
  password: keys.pgPassword,
  port: keys.pgPort,
  // No SSL outside production; in production, SSL without certificate verification
  ssl:
    process.env.NODE_ENV !== 'production'
      ? false
      : { rejectUnauthorized: false },
});

// Every time the pool opens a new connection, make sure the table exists
pgClient.on('connect', (client) => {
  client
    .query('CREATE TABLE IF NOT EXISTS values (number INT)')
    .catch((err) => console.error(err));
});
```

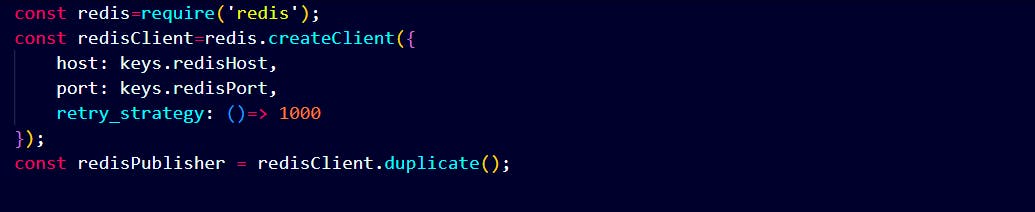

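```js
// REDIS SETUP
const redis = require('redis');

const redisClient = redis.createClient({
  host: keys.redisHost,
  port: keys.redisPort,
  retry_strategy: () => 1000,
});

// Separate connection used to publish messages on the 'insert' channel
const redisPublisher = redisClient.duplicate();

// EXPRESS HANDLERS
app.get('/', (req, res) => {
  res.send('hi');
});

// Every index ever submitted, read from Postgres
app.get('/values/all', async (req, res) => {
  try {
    const values = await pgClient.query('SELECT * FROM values');
    res.send(values.rows);
  } catch (err) {
    // Express 4 does not catch errors from async handlers on its own
    console.error(err);
    res.status(500).send('Could not read values');
  }
});
```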

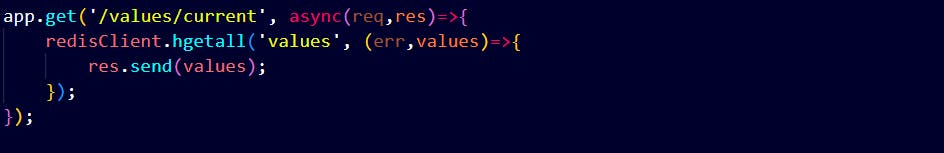

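```js
// Calculated values (index -> result) from the Redis 'values' hash
app.get('/values/current', async (req, res) => {
  redisClient.hgetall('values', (err, values) => {
    if (err) {
      return res.status(500).send('Could not read values');
    }
    res.send(values);
  });
});
```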

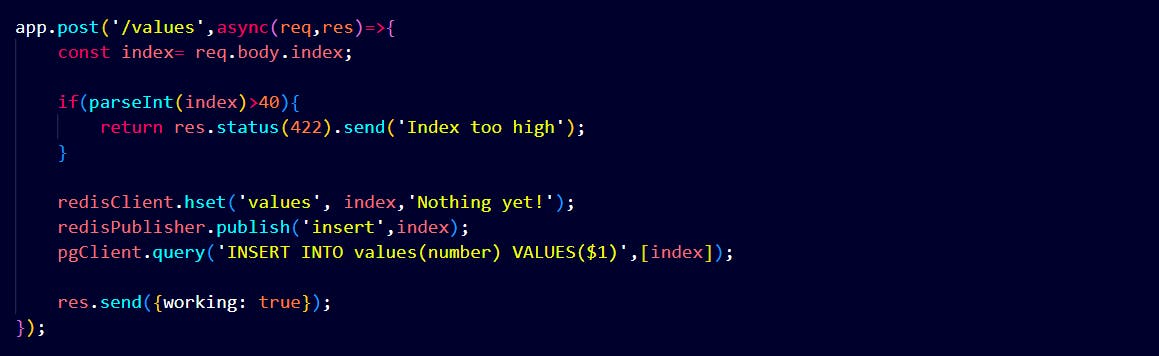

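```js
app.post('/values', async (req, res) => {
  const index = req.body.index;

  // Reject indexes above 40 (the worker's recursive fib gets very slow)
  if (parseInt(index, 10) > 40) {
    return res.status(422).send('Index too high');
  }

  // Placeholder until the worker writes the real value
  redisClient.hset('values', index, 'Nothing yet!');
  // Notify the worker that a new index needs calculating
  redisPublisher.publish('insert', index);
  // Record the submitted index permanently
  pgClient
    .query('INSERT INTO values(number) VALUES($1)', [index])
    .catch((err) => console.error(err));

  res.send({ working: true });
});

app.listen(5000, () => {
  console.log('Listening');
});
```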

The first lines import the connection settings from keys.js, along with express, body-parser and cors.

An Express application is created (app), and middleware functions (cors and body-parser) are applied to handle cross-origin requests and parse JSON request bodies, respectively.

A PostgreSQL connection pool (pgClient) is created with the Pool class from the pg library, using the connection details from the keys module. The ssl option depends on the environment. Outside production, SSL is disabled (false). In production, SSL is enabled with rejectUnauthorized: false, which accepts the database server's certificate without verifying it.

The on('connect') handler runs every time the pool opens a new connection to PostgreSQL. It executes CREATE TABLE IF NOT EXISTS to create a table named values with a single INT column named number. Because of IF NOT EXISTS, the statement does nothing when the table already exists.

Here, a Redis client (redisClient) is created using the configuration from the keys module. Additionally, a publisher (redisPublisher) is created by duplicating the original client. This publisher will be used to publish messages to the 'insert' channel.

A basic route handler for the root path that responds with 'hi'.

A route handler for retrieving all values from the PostgreSQL database. It executes a SELECT query on the 'values' table and responds with the rows.

A route handler for retrieving the current values from Redis. It uses the hgetall method to read every field of the 'values' hash and sends them back as an object that maps each index to its calculated value (or to the 'Nothing yet!' placeholder).

A route handler for POST requests that submit a new index. If the index is greater than 40, it responds with status 422 and 'Index too high'. Otherwise, it performs three steps:

1. It sets the index's field in the 'values' hash to the placeholder 'Nothing yet!'.
2. It publishes the index on the 'insert' channel so that the worker calculates it.
3. It inserts the index into the values table in PostgreSQL.
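The check parseInt(index) > 40 lets non-numeric input through: parseInt('abc') is NaN, and NaN > 40 is false. The worker would then call fib(NaN). Since NaN < 2 is also false, the recursion never reaches its base case and ends with a stack overflow.

A stricter check is sketched below. validateIndex and MAX_INDEX are names introduced for illustration, not part of the tutorial.

```javascript
// Hypothetical stricter validation for POST /values: accept whole numbers
// from 0 to 40 and reject everything else, including non-numeric input.
const MAX_INDEX = 40;

function validateIndex(raw) {
  const text = String(raw).trim();
  // Digits only: rejects 'abc', '', '-3', '4.5' and undefined
  if (!/^\d+$/.test(text)) {
    return { ok: false, status: 422, message: 'Index must be a whole number' };
  }
  const index = parseInt(text, 10);
  if (index > MAX_INDEX) {
    return { ok: false, status: 422, message: 'Index too high' };
  }
  return { ok: true, index };
}

// Inside the Express handler (not executed here):
// const result = validateIndex(req.body.index);
// if (!result.ok) return res.status(result.status).send(result.message);

console.log(validateIndex('abc'));
console.log(validateIndex('12'));
```

Validating on the server keeps bad input from ever reaching Redis, so the worker never receives an index it cannot handle.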

The server listens on port 5000 for incoming requests.

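Next, we will create the React application in a folder named client (the Dockerfile below refers to the client directory). Fib.js fetches data with axios, and App.js uses the React Router v5 API (the component prop on Route). The client therefore needs axios and version 5 of react-router-dom installed.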

(App.js)

```jsx
import React from "react";
import logo from "./logo.svg";
import "./App.css";
import { BrowserRouter as Router, Route, Link } from "react-router-dom";
import OtherPage from "./OtherPage";
import Fib from "./Fib";

function App() {
  return (
    <Router>
      <div className="App">
        <header className="App-header">
          <img src={logo} className="App-logo" alt="logo" />
          <p></p>
          <a
            className="App-link"
            href="https://reactjs.org"
            target="_blank"
            rel="noopener noreferrer"
          >
            Learn React
          </a>
          <Link to="/">Home</Link>
          <Link to="/otherpage">Other Page</Link>
        </header>
        <div>
          <Route exact path="/" component={Fib} />
          <Route path="/otherpage" component={OtherPage} />
        </div>
      </div>
    </Router>
  );
}

export default App;
```

(Fib.js)

```jsx
import React, { Component } from 'react';
import axios from 'axios';

class Fib extends Component {
  state = {
    seenIndexes: [],
    values: {},
    index: '',
  };

  componentDidMount() {
    this.fetchValues();
    this.fetchIndexes();
  }

  async fetchValues() {
    const values = await axios.get('/api/values/current');
    this.setState({ values: values.data });
  }

  async fetchIndexes() {
    const seenIndexes = await axios.get('/api/values/all');
    this.setState({
      seenIndexes: seenIndexes.data,
    });
  }

  handleSubmit = async (event) => {
    event.preventDefault();

    await axios.post('/api/values', {
      index: this.state.index,
    });
    this.setState({ index: '' });
  };

  renderSeenIndexes() {
    return this.state.seenIndexes.map(({ number }) => number).join(', ');
  }

  renderValues() {
    const entries = [];

    for (let key in this.state.values) {
      entries.push(
        <div key={key}>
          For index {key} I calculated {this.state.values[key]}
        </div>
      );
    }

    return entries;
  }

  render() {
    return (
      <div>
        <form onSubmit={this.handleSubmit}>
          <label>Enter your index:</label>
          <input
            value={this.state.index}
            onChange={(event) => this.setState({ index: event.target.value })}
          />
          <button>Submit</button>
        </form>

        <h3>Indexes I have seen:</h3>
        {this.renderSeenIndexes()}

        <h3>Calculated Values:</h3>
        {this.renderValues()}
      </div>
    );
  }
}

export default Fib;
```

(OtherPage.js)

```jsx
import React from "react";
import { Link } from "react-router-dom";

const OtherPage = () => {
  return (
    <div>
      I'm some other page!
      <Link to="/">Go back home</Link>
    </div>
  );
};

export default OtherPage;
```

The application now has three folders:

client (the React app)

server

worker

Each one needs its own development Dockerfile, named Dockerfile.dev.

Dockerizing the Services

Create Dockerfile.dev in the client directory:

```dockerfile
FROM node:16-alpine
WORKDIR /app
COPY ./package.json ./
RUN npm install
COPY . .
CMD ["npm", "run", "start"]
```

Create Dockerfile.dev in the server directory.

```dockerfile
FROM node:14.14.0-alpine
WORKDIR /app
COPY ./package.json ./
RUN npm install
COPY . .
CMD ["npm", "run", "dev"]
```

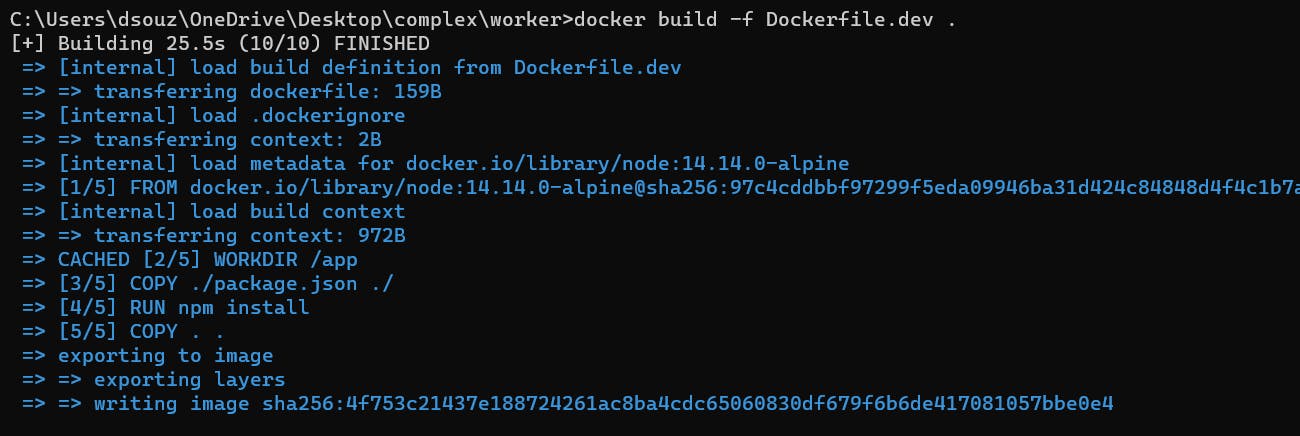

Create Dockerfile.dev in the worker directory:

```dockerfile
FROM node:14.14.0-alpine
WORKDIR /app
COPY ./package.json ./
RUN npm install
COPY . .
CMD ["npm", "run", "dev"]
```

Then build each image from inside its folder, for example with `docker build -f Dockerfile.dev .`, and run it to check that it starts.

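Now create a docker-compose.yml file in the project root. In it we need to define:

postgres - which image to use

redis - which image to use

server (the service is named api) -

a. the build settings

b. the volumes

c. the environment variables

The client and worker services are defined the same way, with build settings and volumes. The worker also needs the Redis environment variables.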

```yaml
version: '3'
services:
  postgres:
    image: 'postgres:latest'
    environment:
      # The postgres image refuses to initialize without a superuser password
      - POSTGRES_PASSWORD=postgres_password
  redis:
    image: 'redis:latest'
  api:
    build:
      dockerfile: Dockerfile.dev
      context: ./server
    volumes:
      # Keep the image's node_modules from being hidden by the bind mount below
      - /app/node_modules
      - ./server:/app
    environment:
      - REDIS_HOST=redis
      - REDIS_PORT=6379
      - PGUSER=postgres
      - PGHOST=postgres
      - PGDATABASE=postgres
      - PGPASSWORD=postgres_password
      - PGPORT=5432
  client:
    build:
      dockerfile: Dockerfile.dev
      context: ./client
    volumes:
      - /app/node_modules
      - ./client:/app
  worker:
    build:
      dockerfile: Dockerfile.dev
      context: ./worker
    volumes:
      - /app/node_modules
      - ./worker:/app
    environment:
      - REDIS_HOST=redis
      - REDIS_PORT=6379
```

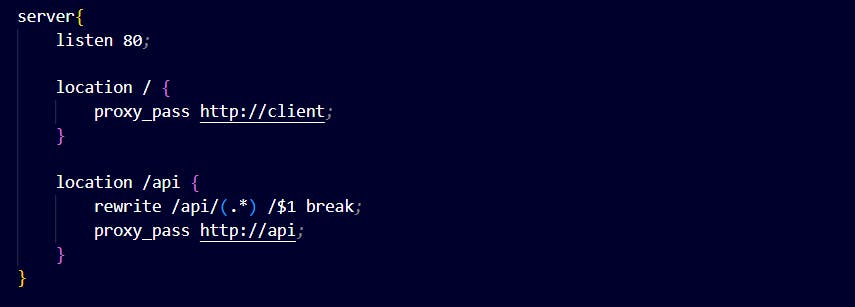

Nginx Path Routing

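When Nginx forwards a request whose path starts with /api to the Express server, it first strips the /api prefix. That way the Express routes are written without it: the browser calls /api/values/all, and Express sees /values/all.

Create a folder named nginx in the project root. Inside it, create a file named default.conf: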

```nginx
upstream client {
  server client:3000;
}

upstream api {
  server api:5000;
}

server {
  listen 80;

  location / {
    proxy_pass http://client;
  }

  location /api {
    rewrite /api/(.*) /$1 break;
    proxy_pass http://api;
  }
}
```

These upstream blocks define groups of servers that can handle requests. There are two, named client and api, and each contains a single server: client:3000 (the React development server) and api:5000 (Express). The hostnames client and api are the service names from docker-compose.yml, which Docker Compose makes resolvable inside its network.

The server block holds the main configuration and tells Nginx to listen on port 80 inside its container.

The first location block handles requests to the root URL (/) and to every other path that no more specific block matches. Its proxy_pass directive forwards them to the client upstream, that is, to client:3000.

The second location block handles requests whose path starts with /api. The rewrite directive strips the /api prefix from the URI (for example, /api/values/all becomes /values/all), and the break flag stops any further rewrite processing. proxy_pass then forwards the modified request to the api upstream, that is, to api:5000.
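To see exactly what Express receives, the rewrite rule can be modelled in a few lines of JavaScript. rewriteApiPath is a hypothetical helper for illustration only, and Nginx does not run this code. The model ignores the query string and covers only the regex-and-replace step.

```javascript
// Rough model of the Nginx directive `rewrite /api/(.*) /$1 break;`.
// Assumption: only the URI path is considered (no query string).
function rewriteApiPath(uri) {
  // Same pattern as the Nginx rule; like the Nginx version it is not anchored
  const match = /\/api\/(.*)/.exec(uri);
  // When the pattern matches, Nginx replaces the whole URI with the replacement
  return match ? `/${match[1]}` : uri;
}

for (const uri of ['/api/values/all', '/api/values', '/api']) {
  console.log(`${uri} -> ${rewriteApiPath(uri)}`);
}
// /api/values/all -> /values/all
// /api/values -> /values
// /api -> /api
```

Note the last case. A request for exactly /api does not match the pattern, which needs the trailing slash. It is therefore passed to Express unchanged, and no Express route handles it.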

Building Custom Nginx Image

Inside the nginx folder, create a file named Dockerfile.dev (the name that docker-compose.yml refers to):

```dockerfile
FROM nginx
COPY ./default.conf /etc/nginx/conf.d/default.conf
```

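Then add an nginx service to docker-compose.yml. It is the only service that publishes a port: port 3050 on the host is mapped to port 80 in the container. restart: always restarts Nginx whenever it stops.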

```yaml
version: '3'
services:
  postgres:
    image: 'postgres:latest'
    environment:
      # The postgres image refuses to initialize without a superuser password
      - POSTGRES_PASSWORD=postgres_password
  redis:
    image: 'redis:latest'
  nginx:
    restart: always
    build:
      dockerfile: Dockerfile.dev
      context: ./nginx
    ports:
      - '3050:80'
  api:
    build:
      dockerfile: Dockerfile.dev
      context: ./server
    volumes:
      - /app/node_modules
      - ./server:/app
    environment:
      - REDIS_HOST=redis
      - REDIS_PORT=6379
      - PGUSER=postgres
      - PGHOST=postgres
      - PGDATABASE=postgres
      - PGPASSWORD=postgres_password
      - PGPORT=5432
  client:
    build:
      dockerfile: Dockerfile.dev
      context: ./client
    volumes:
      - /app/node_modules
      - ./client:/app
  worker:
    build:
      dockerfile: Dockerfile.dev
      context: ./worker
    volumes:
      - /app/node_modules
      - ./worker:/app
    environment:
      - REDIS_HOST=redis
      - REDIS_PORT=6379
```

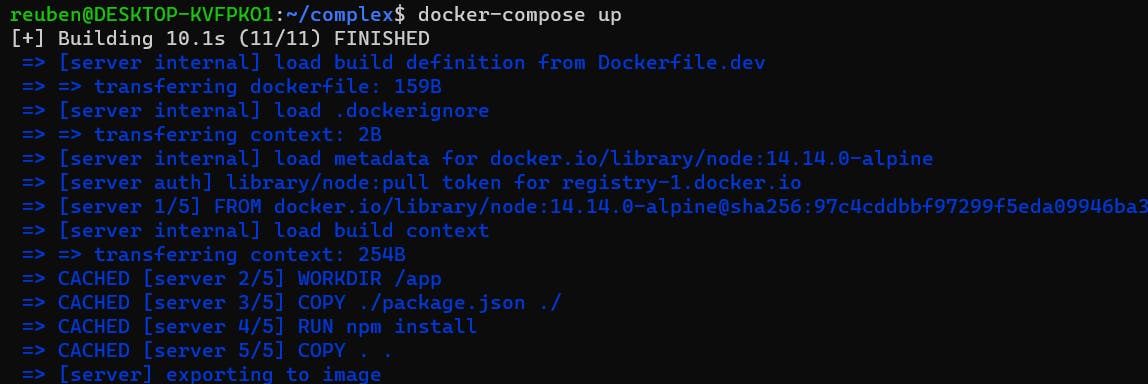

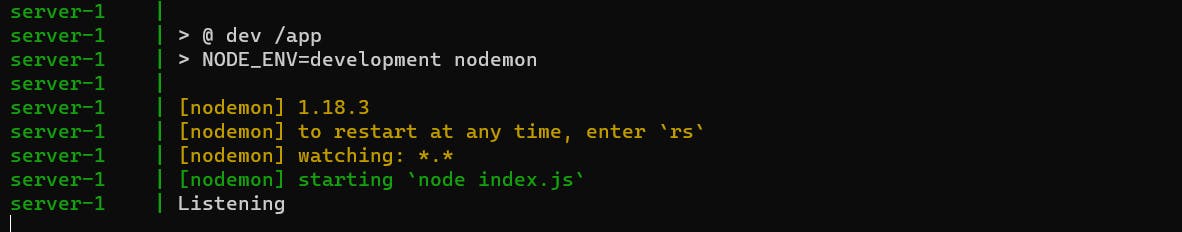

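Next, run `docker-compose up --build` to build the images and start all the services, then open http://localhost:3050 in the browser.

At this point the browser console shows a WebSocket connection error.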

Solving Web Sockets Error

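The React development server keeps a WebSocket connection open on the /ws path so that it can reload the page when the code changes. Nginx does not upgrade proxied connections to WebSocket by default. Add a location block for /ws that uses HTTP/1.1 and forwards the Upgrade and Connection headers:

(default.conf)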

```nginx
upstream client {
  server client:3000;
}

upstream api {
  server api:5000;
}

server {
  listen 80;

  location / {
    proxy_pass http://client;
  }

  location /api {
    rewrite /api/(.*) /$1 break;
    proxy_pass http://api;
  }

  location /ws {
    proxy_pass http://client;
    proxy_http_version 1.1;
    proxy_set_header Upgrade $http_upgrade;
    proxy_set_header Connection "Upgrade";
  }
}
```

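Then update docker-compose.yml with three changes:

- nginx waits for api and client through depends_on.
- The client gets WDS_SOCKET_PORT=0, so the development server's WebSocket connects through the port the page was loaded from (3050, via Nginx) instead of port 3000.
- The postgres service gets a POSTGRES_PASSWORD that matches the PGPASSWORD used by the api.

(docker-compose.yml)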

```yaml
version: "3"
services:
  postgres:
    image: "postgres:latest"
    environment:
      - POSTGRES_PASSWORD=postgres_password
  redis:
    image: "redis:latest"
  nginx:
    depends_on:
      - api
      - client
    restart: always
    build:
      dockerfile: Dockerfile.dev
      context: ./nginx
    ports:
      - "3050:80"
  api:
    build:
      dockerfile: Dockerfile.dev
      context: ./server
    volumes:
      - /app/node_modules
      - ./server:/app
    environment:
      - REDIS_HOST=redis
      - REDIS_PORT=6379
      - PGUSER=postgres
      - PGHOST=postgres
      - PGDATABASE=postgres
      - PGPASSWORD=postgres_password
      - PGPORT=5432
  client:
    build:
      dockerfile: Dockerfile.dev
      context: ./client
    volumes:
      # Paths match WORKDIR /app in the client's Dockerfile.dev
      - /app/node_modules
      - ./client:/app
    environment:
      # Use the page's own port for the dev server WebSocket (3050, through Nginx)
      - WDS_SOCKET_PORT=0
  worker:
    build:
      dockerfile: Dockerfile.dev
      context: ./worker
    volumes:
      - /app/node_modules
      - ./worker:/app
    environment:
      - REDIS_HOST=redis
      - REDIS_PORT=6379
```